Eliminate Duplicate Content if You Want to Boost Your SEO

What is Duplicate Content?

Let’s start with exactly what we mean by duplicate content. Duplicate content is identical or very similar content that appears on more than one page (URL) of a website, or across multiple websites. This is a problem for search engines because it makes it difficult for them to determine which version of the content is most relevant to a particular search query, and therefore which page, or URL, to rank higher in the search results. Search engines may even rank all of the duplicate pages lower and give the higher ranking to other pages.

Similarly, too much duplicate content can cause issues with website ranking, as search engines may simply penalise websites for having too much duplicate content. To avoid this problem, it’s important to ensure that all the content on a website is unique and relevant to the topic at hand.

How Does Duplicate Content Affect Website SEO?

Duplicate content can negatively affect a website’s ranking in search engines in several ways.

As discussed above, search engines may have a harder time determining which version of the content is most relevant to a particular search query, which can result in lower rankings for the website. Also, search engines may penalise websites for having too much duplicate content by lowering their rankings or even removing them from the search results altogether.

It can dilute the backlink value of a website. If the same content appears on multiple pages or websites, the links pointing to it are spread across those copies, so each link is not as valuable as it would be if it pointed to a single, unique page.

It can lead to a poor user experience, as search engines may present multiple versions of the same content to users, which can be confusing and frustrating.

Search engines may also use metrics like click-through rate (CTR) and bounce rate to help determine the relevance of a webpage. If the same content is accessible through multiple URLs, these metrics are divided among those URLs, so no single version builds up as strong a signal as it would on its own.

Overall, having duplicate content on your website can confuse search engines and make it difficult for them to determine the relevance and value of your pages, which can lead to lower rankings and reduced visibility in search results.

To avoid these issues, it’s important to ensure that all the content on a website is unique and relevant to the topic at hand.

Can Duplicate Content Affect the User Experience?

In short, yes. Duplicate content can harm the user experience in several ways:

- Confusion: When users come across multiple versions of the same content, they may become confused about which version is the most accurate or up to date. This can lead to frustration and mistrust of the website.

- Losing trust: When users find the same content on multiple websites, they may question the originality and authenticity of the content, which can lead to reduced trust in those websites. And, of course, users will not necessarily know which website originated the content.

- Poor navigation: When duplicate content is present on a website, it can make it difficult for users to navigate the site and find the information they are looking for. As well as causing confusion and a loss of trust, this can lead to increased bounce rates and reduced engagement with the website.

- Reduced engagement: When users come across duplicate content, they may lose interest in the website and move on to a different site. This can lead to reduced engagement and fewer conversions.

- Reduced relevance: Search engines may present multiple versions of the same content to users, which can be confusing and frustrating. The user may end up with irrelevant search results, which can lead to poor user experience.

So, duplicate content can lead to a poor user experience and reduced trust in the website, which can lead to lower engagement and fewer conversions. It can also make it difficult for users to find the information they are looking for and can lead to confusion and frustration.

Does Google Have a Duplicate Content Policy?

You may have read that Google has a duplicate content policy. There are many articles discussing this: 3.9Bn results, in fact, when searching for “Does Google have a duplicate content policy?”

However, at the time of writing, we cannot find any such policy within Google’s Privacy and Terms. There are, however, many hints and related policies designed to ensure that search results are as relevant and useful as possible for users.

What we did find is this quote –

“To be eligible to appear in Google web search results (web pages, images, videos, news content or other material that Google finds from across the web), content shouldn’t violate Google Search’s overall policies or the spam policies”

Within these policies is Google’s spam policy, which includes a section on ‘Scraped content’:

“Some site owners base their sites around content taken (“scraped”) from other, often more reputable sites. Scraped content, even from high quality sources, without additional useful services or content provided by your site may not provide added value to users. It may also constitute copyright infringement. A site may also be demoted if a significant number of valid legal removal requests have been received. Examples of abusive scraping include:

- Sites that copy and republish content from other sites without adding any original content or value, or even citing the original source

- Sites that copy content from other sites, modify it only slightly (for example, by substituting synonyms or using automated techniques), and republish it

- Sites that reproduce content feeds from other sites without providing some type of unique benefit to the user

- Sites dedicated to embedding or compiling content, such as videos, images, or other media from other sites, without substantial added value to the user”

So, duplicate content policy or not, duplicate content can have a negative effect on your website rankings.

Finally, when you see patents for “Duplicate/near duplicate detection…”, you can conclude that Google is proactively looking for duplicate content – and it won’t be so it can rank it higher! We can have some confidence that Google’s algorithm is able to detect duplicate content.

While, in general, Google does not penalise websites for having duplicate content, it may choose not to index or show all of the duplicate versions in its search results. This can make it difficult for a website with significant amounts of duplicate content to rank well in search results. To avoid this problem, it’s important to ensure that all the content on a website is unique and relevant to the topic at hand.

How Could You End up With Duplicate Content?

Google does not blindly penalise websites for duplicates, because duplicate content can arise in a variety of innocent ways; it is not always the result of malicious intent. Some common causes include:

- Content syndication: When a website republishes content from another website without making any changes or modifications.

- Multiple URLs: When the same content is accessible through multiple URLs, such as through both www and non-www versions of a website or through HTTP and HTTPS versions.

- Printer-friendly pages: When a website has printer-friendly versions of pages that are identical to the original pages.

- Session IDs: Some websites add session IDs to their URLs to track user behaviour, which can result in the same content being accessible through many different URLs.

- Product descriptions: Many e-commerce websites use the same product descriptions for multiple products, which can lead to duplicate content issues.

- Blogs and forums: Many blogs and forums show the same content on multiple pages (for example, a post that also appears in full on category, tag or archive pages), which can lead to duplicate content issues.
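To make the ‘multiple URLs’ and ‘session IDs’ causes concrete, here is a minimal Python sketch that maps common variants of one address onto a single preferred URL. It assumes a hypothetical site at www.example.com that prefers HTTPS and the www host, and hypothetical session parameter names (sessionid, sid, phpsessid); a real site would substitute its own. It only illustrates how several URLs can lead to the same content; it is not how any search engine normalises URLs.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical example site: replace with your own preferred host.
PREFERRED_HOST = "www.example.com"
# Hypothetical session-tracking parameter names to strip (compared in lower case).
SESSION_PARAMS = {"sessionid", "sid", "phpsessid"}


def normalise_url(url: str) -> str:
    """Map common duplicate variants of a URL onto one preferred form."""
    parts = urlsplit(url)
    # .hostname is lower-cased and excludes any port number.
    host = parts.hostname or ""
    # Treat example.com and www.example.com as the same site.
    if host == PREFERRED_HOST.removeprefix("www."):
        host = PREFERRED_HOST
    # Drop session-ID parameters but keep all other parameters in order.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
    ]
    # Always use https, default an empty path to "/", and drop any #fragment.
    return urlunsplit(("https", host, parts.path or "/", urlencode(query), ""))


variants = [
    "http://example.com/shoes?sessionid=abc123",
    "https://www.example.com/shoes",
    "http://WWW.example.com/shoes?SID=42",
]
for v in variants:
    print(normalise_url(v))
```

All three variants print the same address, https://www.example.com/shoes, yet each is a URL that a search engine could otherwise treat as a separate page.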

Duplicate content could also be ‘scraped’ content, where a website copies content from another website and republishes it as its own. Such content IS a candidate for search engine penalties.

To avoid these issues, it’s important to ensure that all of the content on a website is unique and relevant to the topic at hand, and to use the rel="canonical" tag to indicate to search engines which version of the content should be considered the original and indexed. Additionally, regularly monitoring your website for duplicate content and taking action to remove or fix it can help prevent negative impacts on your website’s SEO.
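As a small illustration of the rel="canonical" tag, the Python sketch below builds the `<link>` element that goes in the `<head>` of a duplicate page and does a naive insertion into an HTML string. The function names and the example URL are hypothetical; in practice this tag is usually added by your CMS or page templates.

```python
from html import escape


def canonical_link_tag(canonical_url: str) -> str:
    """Return the <link rel="canonical"> element pointing at the preferred URL."""
    # escape() turns characters such as & and " into HTML entities so the
    # URL cannot break out of the href attribute.
    return f'<link rel="canonical" href="{escape(canonical_url, quote=True)}">'


def add_canonical(html_doc: str, canonical_url: str) -> str:
    """Insert the canonical tag just before </head> (a naive string insert)."""
    tag = canonical_link_tag(canonical_url)
    return html_doc.replace("</head>", f"{tag}\n</head>", 1)


# A duplicate page (for example, one reached via a tracking parameter)
# declaring the original product page as canonical.
page = "<html><head><title>Red shoes</title></head><body>...</body></html>"
print(add_canonical(page, "https://www.example.com/shoes"))
```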

Usually, a website owner won’t purposely create duplicate content. That’s why Google doesn’t penalise it blindly. There’s also the difference between copied content and duplicate content.

What is the Difference Between Duplicate and Copied Content?

Copied content and duplicate content are similar in that they both refer to content that appears on multiple pages or websites, but there is a subtle difference between the two terms.

Copied content refers to content that is taken from another website or source and republished on a different website without permission or attribution. This is plagiarism and is generally considered to be a violation of copyright law.

Duplicate content, on the other hand, refers to identical or very similar content that appears on multiple pages of a website or across multiple websites. This can happen for a variety of reasons, such as content syndication, session IDs and multiple URLs. While duplicate content is not illegal, it can still be a problem for search engines and can negatively impact a website’s SEO.

In summary, copied content is a form of plagiarism and is generally illegal, while duplicate content is not illegal but can still negatively impact a website’s SEO.

How do You Find Duplicate Content?

There are a few ways to find duplicate content on a website:

- Use a website crawler tool: A website crawler, also known as a spider or bot, is a software tool that automatically visits and scans your website to gather information about its structure, content and links, and it can identify any duplicate content it finds.

- Use a plagiarism detection tool: A plagiarism detection tool can be used to find duplicate content across multiple websites. It compares your documents, or content, against a database of content that the tool has built up by crawling and indexing the web itself.

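- Use Google Search Console: Google Search Console allows you to see which pages on your website are being indexed by Google. Its Page indexing report also lists pages left out of the index as duplicates, with reasons such as “Duplicate without user-selected canonical”. You can use this information to identify any duplicate pages that may be causing issues with your website’s SEO.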

- Use a spreadsheet: Manually comparing the content of each page of your website can also be a good way to find duplicate content. You can use a spreadsheet to organize and compare the content of each page to identify any duplicates.
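Crawler and comparison tools differ in how they measure similarity. One common, simple approach is to break each page’s text into overlapping runs of words (‘shingles’) and compare the sets using Jaccard similarity. The Python sketch below assumes you have already fetched the visible text of each page into a dictionary keyed by URL; the 5-word shingle size, the 0.8 threshold and the example pages are illustrative assumptions, not values used by any particular tool.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Split text into the set of overlapping k-word runs ('shingles')."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles: 0.0 = nothing shared, 1.0 = identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_duplicates(pages: dict[str, str], threshold: float = 0.8,
                    k: int = 5) -> list[tuple[str, str, float]]:
    """Return (url_a, url_b, score) for every pair of pages at or above the threshold."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    results = []
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            results.append((a, b, round(score, 2)))
    return results


pages = {
    "/shoes": "Our red running shoes are light, comfortable and built for long distances on any road.",
    "/print/shoes": "Our red running shoes are light, comfortable and built for long distances on any road.",
    "/trail-shoes": "Our red running shoes are light, comfortable and built for long distances on any trail.",
    "/about": "We are a small family business that helps local runners find the right kit.",
}
for a, b, score in find_duplicates(pages):
    print(f"{a} ~ {b}: {score}")
```

The exact copy scores 1.0, while the product page that differs by a single word still scores well above the threshold, which is why near-duplicates such as reused product descriptions are caught too.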

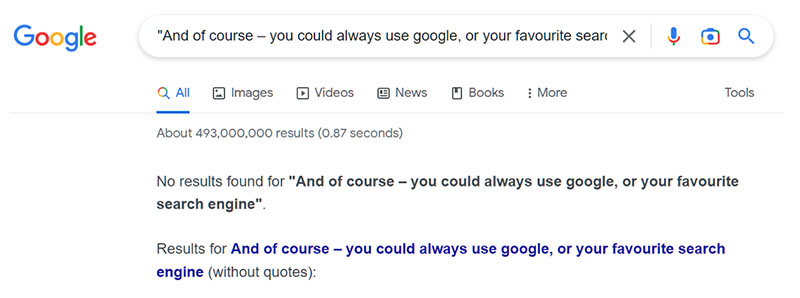

And of course, for a nice, simple and quick check, you could always use Google or your favourite search engine. Try copying 10–15 words from a sentence on a page, then paste them within “quotes” into a Google search.
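The quoted-search check can also be scripted. This Python sketch takes the first dozen words of a sentence and builds a Google search URL for that exact phrase, ready to paste into a browser. The 12-word default simply sits inside the 10–15 word range suggested above, and the function name is hypothetical.

```python
import re
from urllib.parse import urlencode


def exact_phrase_search_url(sentence: str, max_words: int = 12) -> str:
    """Build a Google search URL for the first max_words words, wrapped in quotes."""
    words = re.findall(r"\S+", sentence)[:max_words]
    phrase = " ".join(words)
    # Double quotes around the phrase ask Google for exact-match results;
    # urlencode() percent-encodes the quotes and turns spaces into '+'.
    return "https://www.google.com/search?" + urlencode({"q": f'"{phrase}"'})


sentence = ("Duplicate content refers to identical or very similar content that "
            "appears on multiple pages, or URLs of a website or across multiple websites.")
print(exact_phrase_search_url(sentence))
```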

It’s important to note that finding duplicate content on your website is just the first step; it’s also important to take action to fix it.

How do You Fix Duplicate Content?

To fix existing duplicate content and avoid creating more, you can follow these tips:

- Use the rel="canonical" tag: This tag tells search engines which version of the content should be considered the original, and therefore which version should be indexed and presented in search results. By adding a rel="canonical" tag to the duplicate pages, you can specify which page should be indexed and reduce any negative impact on your website’s SEO. We’ll leave canonical tags there for now, as this is a technical topic, and we will explain the ins and outs of canonical tags in a future planned article.

- Use 301 redirects: If you have duplicate pages on your website, you can use 301 redirects to redirect users and search engines from the duplicate page to the original page. This can help to consolidate link value and prevent any negative impact on your website’s SEO. Once again – we will explain more about 301 redirects in a future planned article.

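- Use noindex meta tags: A noindex meta tag asks search engines not to index a page, which is useful for duplicate pages (such as printer-friendly versions) that you still want to keep available to users. Once again – we will explain more about noindex meta tags in a future planned article.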

- Use unique and relevant content: Creating unique and relevant content for your website is the surest way to avoid duplicate content issues. When creating your content, avoid copying and pasting content from other websites, and follow rule number 1 – make sure that all the content on your website is relevant to your page topic.

- Use consistent URLs: Use one consistent form of URL across your website, such as always using https instead of http, choosing either the www or the non-www version of your domain, and avoiding session IDs in URLs.

- Check for duplicate content regularly: Regularly monitoring your website for duplicate content and taking action to remove or fix it can help prevent negative impacts on your website’s SEO.

- Use structured data: Use structured data (such as schema.org) to help search engines understand the context of your content, which may help reduce the risk of duplicate content issues. We’ll leave schema.org there for now, as this is another technical topic, and we will explain more about it in a future planned article. You really should use it.

- Be aware of cross-domain duplication: This is where the same content appears on multiple websites, each with a different URL. If you are syndicating your content, do it in a way that preserves the original URLs and lets search engines work out the relationship between the original source and the syndicated copies, for example by having each syndicated copy link back to the original article.
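The redirect, canonical and noindex tips work together. The Python sketch below decides how a hypothetical site at www.example.com might answer a request: a permanent 301 redirect for the wrong scheme or host, a noindex robots meta tag for a printer-friendly copy (assumed here to live under a hypothetical /print/ path), and a self-referencing canonical tag for everything else. It returns plain values rather than running a web server, so the decision logic is easy to follow.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferred origin for an example site.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.example.com"
# Hypothetical path prefix under which printer-friendly copies are served.
PRINT_PREFIX = "/print/"


def plan_response(url: str) -> tuple[int, dict[str, str], str]:
    """Return (status code, response headers, extra <head> markup) for a request URL."""
    parts = urlsplit(url)
    path = parts.path or "/"
    preferred = urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, path, parts.query, ""))

    # Wrong scheme or host (http://, or the non-www domain): permanent 301 redirect.
    if parts.scheme != CANONICAL_SCHEME or parts.hostname != CANONICAL_HOST:
        return 301, {"Location": preferred}, ""

    # Printer-friendly copy: still served to users, but marked noindex.
    if path.startswith(PRINT_PREFIX):
        return 200, {}, '<meta name="robots" content="noindex">'

    # Normal page: a self-referencing canonical tag.
    return 200, {}, f'<link rel="canonical" href="{escape(preferred, quote=True)}">'


for url in ("http://example.com/shoes",
            "https://www.example.com/print/shoes",
            "https://www.example.com/shoes"):
    print(url, "->", plan_response(url))
```

The printer-friendly copy gets only the noindex tag, not a canonical tag as well, so each page sends one clear signal. On a real site, redirect rules like these usually live in the web server or CDN configuration rather than in application code.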

By following these tips, you can help to prevent duplicate content issues and ensure that your website’s SEO is not negatively impacted.
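For the structured-data tip in the list above, schema.org markup is commonly embedded as JSON-LD inside a `<script type="application/ld+json">` element. This Python sketch generates a minimal Article description; the headline, URL, publisher and date in the example are placeholders, and the properties shown are only a small subset of what schema.org defines.

```python
import json


def article_json_ld(headline: str, url: str, publisher: str, date_published: str) -> str:
    """Return a <script> element describing the page as a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        # Use the same URL as the page's canonical tag so the two agree.
        "mainEntityOfPage": url,
        "publisher": {"@type": "Organization", "name": publisher},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2024-01-31"
    }
    # Escape "</" so text such as "</script>" cannot close the element early;
    # "<\/" is still valid JSON.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(article_json_ld(
    "Eliminate Duplicate Content if You Want to Boost Your SEO",
    "https://www.example.com/duplicate-content",  # placeholder URL
    "Example Ltd",                                # placeholder publisher
    "2024-01-31",                                 # placeholder date
))
```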

It’s important to note that even if you fix the duplicate content on your website, it may take some time for search engines to re-crawl your site and update their index. Additionally, if the duplicate content is on another website, you will have to contact the webmaster of that site and ask them to remove it.

In Summary

Duplicate content is identical or very similar content that appears on multiple pages of a website or across multiple websites. It can negatively affect a website’s SEO by making it difficult for search engines to determine which version of the content is most relevant to a particular search query or search intent, which can lower the website’s rankings, reduce its ‘link’ value and lead to a poor user experience.

Search engines ‘may’ penalise websites for having too much duplicate content by lowering their rankings or even removing them from the search results. To fix duplicate content, you can use a rel="canonical" tag, use 301 redirects, use the noindex meta tag, or remove the duplicate and replace it with unique content; then keep monitoring your website for new duplicate content and act as soon as you find it. To find duplicate content, you can use a website crawler tool, a plagiarism detection tool, Google Search Console, a spreadsheet, a tool that checks for duplicate content within your website, or a simple Google search.

Can We Help?

There is a lot to take in when thinking about SEO and it can often become overwhelming, even for the more technically minded, so you may be looking for some help.

The articles published on the social:definition website are about everyday subjects that we deal with. We’ve done all the hard work, read the documents, and put everything into practice. We’ve learned a lot ourselves through continued research, our own development and practical experience. All this experience goes into the work we carry out for our clients, so, if we can help, we’d be only too happy to.

You can contact us directly through the form on this page or using any of the details throughout our website.
